PTP Notifications Overview

StarlingX provides ptp-notification to support applications that rely on PTP for time synchronization and require the ability to determine if the system time is out of sync. ptp-notification provides the ability for user applications to query the sync state of hosts as well as subscribe to push notifications for changes in the sync status.

StarlingX provides a Sidecar, which runs with the hosted application in the same pod and communicates with the application via a REST API.

PTP-notification consists of two main components:

The ptp-notification system application can be installed on nodes using PTP clock synchronization. This monitors the various time services and provides the v1 and v2 REST API for clients to query and subscribe to.

The ptp-notification sidecar. This is a container image which can be configured as a sidecar and deployed alongside user applications that wish to use the ptp-notification API. User applications only need to be aware of the sidecar, making queries and subscriptions via its API. The sidecar handles locating the appropriate ptp-notification endpoints, executing the query and returning the results to the user application.

StarlingX supports the following features:

Enables applications to subscribe to PTP status notifications and to pull the PTP state on demand.

Uses a REST API to communicate PTP notifications to the application.

Enables operators to install the ptp-notification-armada-app, Sidecar container, and the application supporting the REST API. For more information, see https://docs.starlingx.io/api-ref/ptp-notification-armada-app/index.html.

Supports the ptp4l module and PTP port that is configured in Subordinate mode (Secondary mode).

The PTP notification Sidecar container can be configured with a Liveness Probe, if required. See Liveness Probe for more information.

Differences between v1 and v2 REST APIs

Use of the v1 and v2 APIs is distinguished by the version identifier in the URI when interacting with the sidecar container. Both are always available. For example:

v1 API

/ocloudNotifications/v1/subscriptions

v2 API

/ocloudNotifications/v2/subscriptions

The v1 API is maintained for backward compatibility with existing deployments. New deployments should use the v2 API.
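As a minimal sketch of how a client might select the API version, the helper below builds the subscriptions URI from a version identifier. The base address `http://127.0.0.1:8080` and the function name `subscriptions_url` are assumptions for illustration only; use the address and port your sidecar is actually configured with.

```python
# Assumption: the sidecar is reachable on localhost port 8080.
# Adjust SIDECAR_BASE to match your own deployment.
SIDECAR_BASE = "http://127.0.0.1:8080"


def subscriptions_url(api_version: str, base: str = SIDECAR_BASE) -> str:
    """Return the subscriptions endpoint for the given API version ("v1" or "v2")."""
    if api_version not in ("v1", "v2"):
        raise ValueError(f"unsupported API version: {api_version!r}")
    # The version identifier in the path is what selects the API.
    return f"{base.rstrip('/')}/ocloudNotifications/{api_version}/subscriptions"


if __name__ == "__main__":
    print(subscriptions_url("v1"))
    print(subscriptions_url("v2"))
```

Keeping the version in a single parameter makes it straightforward to move an existing v1 client to v2 later, although the request and response bodies differ between the two APIs and must be updated separately.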

v1 Limitations

Support for monitoring a single ptp4l instance per host - no other services can be queried/subscribed to.

API does not conform to the O-RAN.WG6.O-Cloud Notification API-v02.01 standard.

See the respective ptp-notification v1 and v2 document subsections for details on the behaviour.

Integrated Containerized Applications

Applications that rely on PTP for synchronization have the ability to retrieve the relevant data for the status of the monitored service. User applications may subscribe to notifications from multiple service types and from multiple separate nodes.

Once an application subscribes to PTP notifications, it receives initial data showing the current service state. It then receives a notification whenever the sync status changes, and it can also request the current state on demand (pull).

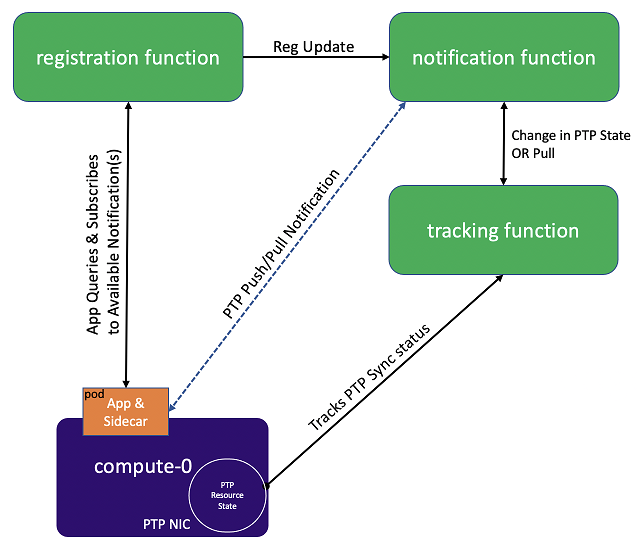

The figure below describes the subscription framework for PTP notifications.

PTP Notification Helm App High CPU Usage Limitation

When the liveness probe is configured, the PTP Notification and PTP Notification Client applications can cause a high CPU load. This issue was first reported on https://github.com/jfrog/charts/issues/1657.

This issue occurs when using exec-style probes, and can be identified by the following chart block:

livenessProbe:
  exec:
    command:
      - curl
      - http://127.0.0.1:8181/health

Workaround: To prevent this issue, edit the application’s daemonset chart or the application’s deployment chart, and replace the liveness portion using the following steps:

Procedure

Remove the existing exec command block:

{{- if .Values.location.endpoint.liveness }}
livenessProbe:
  exec:
    command:
      - curl
      - http://127.0.0.1:8080/health
  failureThreshold: 3
  initialDelaySeconds: 30
  periodSeconds: 5
  successThreshold: 1
  timeoutSeconds: 5
{{ end }}

Replace the existing exec command block with the httpGet block:

{{- if .Values.location.endpoint.liveness }}
livenessProbe:
  failureThreshold: 3
  httpGet:
    path: /health
    port: {{ .Values.location.endpoint.port }}
    scheme: HTTP
  initialDelaySeconds: 60
  periodSeconds: 3
  successThreshold: 1
  timeoutSeconds: 3
{{ end }}

Upload and apply the new application.

~(keystone_admin)]$ system application-upload /usr/local/share/applications/helm/ptp-notification-xx.xx-xxx.tgz
~(keystone_admin)]$ system helm-override-update ptp-notification ptp-notification notification --values notification-override.yaml
~(keystone_admin)]$ system application-apply ptp-notification

Verify that the pod can respond to the liveness probe.

Get the pod’s IP address using the kubectl get pods -n <namespace> -o wide -w command. Then use curl to verify that the pod can respond to the liveness probe.

curl http://<pods-ip-address>:8080/health

It must respond with:

{"health": true}
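The same verification can be scripted. The following is a minimal sketch, not part of the ptp-notification tooling: the function names `is_healthy` and `check_pod` are hypothetical, and the default port 8080 is taken from the curl example above. It treats the pod as healthy only when the response body is a JSON object whose `health` field is `true`.

```python
import json
import urllib.request


def is_healthy(body: str) -> bool:
    """Return True only if body is a JSON object whose "health" field is true."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return False
    return isinstance(payload, dict) and payload.get("health") is True


def check_pod(pod_ip: str, port: int = 8080, timeout: float = 5.0) -> bool:
    """Query http://<pod_ip>:<port>/health and evaluate the response body."""
    url = f"http://{pod_ip}:{port}/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return is_healthy(resp.read().decode("utf-8", errors="replace"))
    except OSError:
        # Covers connection failures, timeouts, and HTTP error responses.
        return False
```

Calling `check_pod("<pods-ip-address>")` with the address reported by `kubectl get pods -o wide` returns `True` when the endpoint answers as shown above, and `False` for any connection error or unexpected body.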

Liveness Probe

The PTP notification Sidecar container can be configured with a Liveness probe, if required. You can edit the Sidecar values in the deployment manifest to include these parameters.

Note

Port and timeout values can be configured to meet user preferences.

The periodSeconds and timeoutSeconds values can be adjusted based on system performance. A shorter value places more resource demand on the Sidecar pod, so longer values can improve pod stability.

cat <<EOF >
items:
  spec:
    template:
      spec:
        containers:
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
              scheme: HTTP
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 5
            successThreshold: 1
            timeoutSeconds: 5
EOF
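To make the trade-off in the note concrete, the sketch below computes two rough figures from the probe settings: how many probes run per minute, and approximately how long consecutive failures take to reach failureThreshold, at which point Kubernetes restarts the container. Both helper names are hypothetical, and the detection time is a simplification that ignores timeoutSeconds and scheduling jitter.

```python
def probes_per_minute(period_seconds: int) -> float:
    """Number of liveness probes issued per minute for a given periodSeconds."""
    return 60 / period_seconds


def approx_detection_seconds(period_seconds: int, failure_threshold: int) -> int:
    """Rough time for failure_threshold consecutive failed probes to accumulate.

    Simplification: ignores timeoutSeconds and kubelet scheduling jitter.
    """
    return period_seconds * failure_threshold


if __name__ == "__main__":
    # Values from the example above: periodSeconds=5, failureThreshold=3.
    print(probes_per_minute(5))
    print(approx_detection_seconds(5, 3))
    # A shorter period probes more often and detects failures sooner.
    print(probes_per_minute(3))
    print(approx_detection_seconds(3, 3))
```

With periodSeconds set to 5, the pod handles 12 probes per minute and a persistent failure is detected after roughly 15 seconds. Reducing the period to 3 raises the load to 20 probes per minute in exchange for detection in roughly 9 seconds.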

Container images and API compatibility

The ptp-notification application provides a v1 API for backwards compatibility with client applications deployed on StarlingX r9.0, as well as a v2 API for O-RAN Spec Compliant Timing notifications. By default, ptp-notification deploys two notificationservice-base containers to support these APIs. Users must decide which API they will use by deploying the appropriate notificationclient-base image as a sidecar with their consumer application.

The v1 API uses starlingx/notificationservice-base:stx.9.0-v2.1.1

Compatible with the image: starlingx/notificationclient-base:stx.5.0-v1.0.4

The v2 API uses starlingx/notificationservice-base-v2:stx.9.0-v2.1.1

Compatible with the image: starlingx/notificationclient-base:stx.9.0-v2.1.1

Upgrades of StarlingX r9.0 to the next patch will automatically upgrade the ptp-notification application and deploy both the v1 and v2 API containers. Consumer applications determine which API they interact with based on the version of notificationclient-base that is deployed alongside their application.
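The image pairings listed in this section can be captured in a small lookup table, for example to validate a deployment manifest before it is applied. This is an illustrative sketch only: the `IMAGES` table and both helper functions are hypothetical names, and the image tags are copied from the pairings above.

```python
# Service and client image pairings, copied from the compatibility list above.
IMAGES = {
    "v1": {
        "service": "starlingx/notificationservice-base:stx.9.0-v2.1.1",
        "client": "starlingx/notificationclient-base:stx.5.0-v1.0.4",
    },
    "v2": {
        "service": "starlingx/notificationservice-base-v2:stx.9.0-v2.1.1",
        "client": "starlingx/notificationclient-base:stx.9.0-v2.1.1",
    },
}


def client_image_for(api_version: str) -> str:
    """Return the sidecar (client) image to deploy for the chosen API version."""
    return IMAGES[api_version]["client"]


def api_version_for_client(client_image: str) -> str:
    """Return the API version that a given sidecar (client) image talks to."""
    for version, images in IMAGES.items():
        if images["client"] == client_image:
            return version
    raise ValueError(f"unknown client image: {client_image}")
```

For example, `api_version_for_client("starlingx/notificationclient-base:stx.9.0-v2.1.1")` returns `"v2"`, reflecting that the deployed sidecar image, not the service side, determines which API the consumer application uses.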